Immersive Marketing Insights | 08/05/19

This week we focus on [AR]T — big tech and galleries are collaborating to advance augmented reality art. We also look at how Snapchat works with brands and how Adobe rethinks the in-store retail experience. Take a peek at our insights.

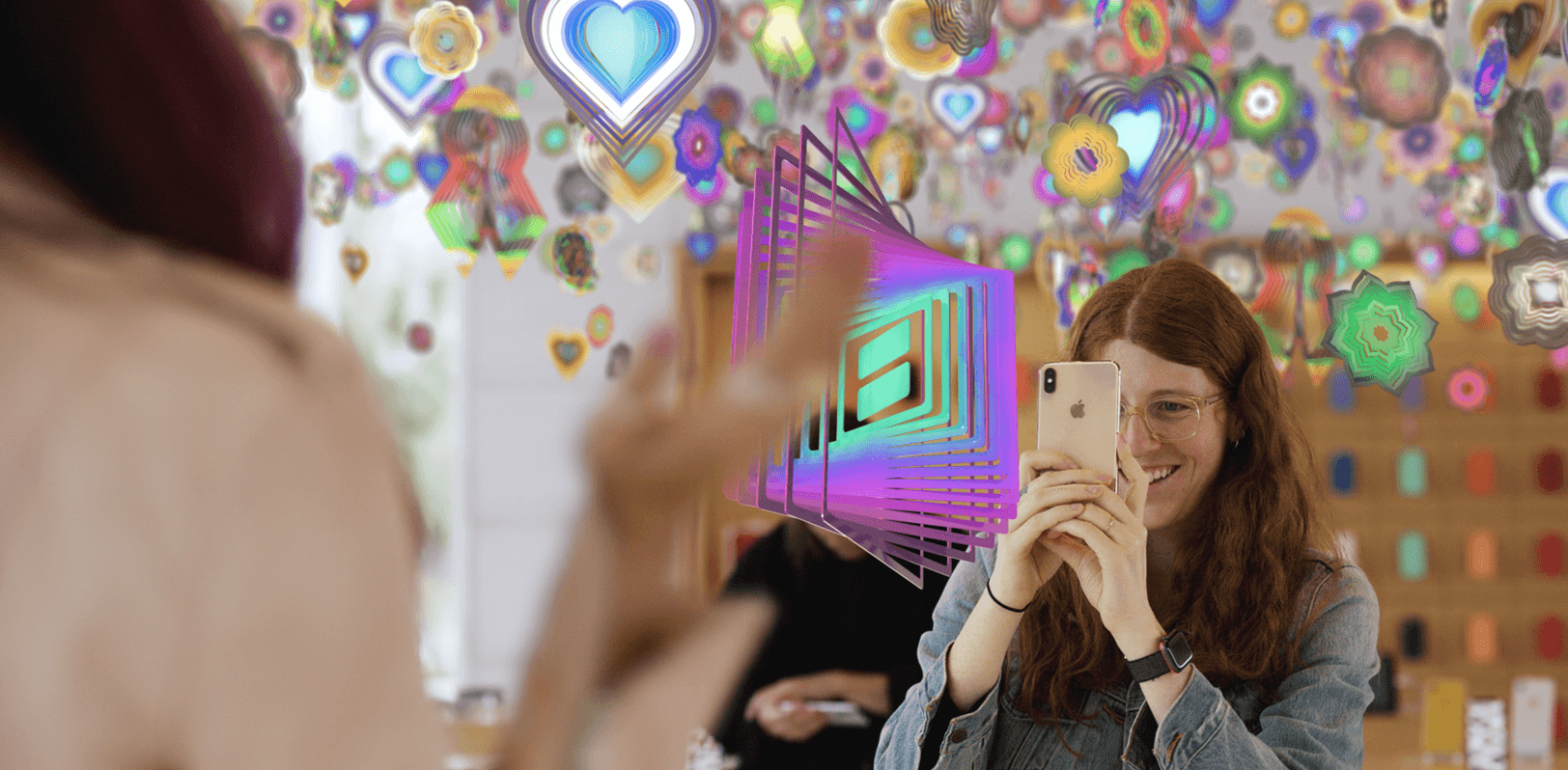

Apple unveils augmented reality art initiative

Apple consistently casts votes of confidence for augmented reality, and the latest Apple [AR]T announcement is a windfall for AR creatives. The exciting initiative takes three forms. [AR]T Walks are interactive guided tours through a series of seven immersive AR artworks; you can register for one in Hong Kong, London, New York, Paris, San Francisco, or Tokyo. [AR]T Labs are workshops in Apple Stores that teach customers to make their own augmented experiences with Swift Playgrounds for iPad. [AR]T at the Apple Store is an installation in every Apple retail store worldwide, featuring an AR piece by Nick Cave. The project showcases how renowned contemporary artists are using AR to sharpen their cutting edge. Developed in collaboration with the New Museum, the [AR]T experiences opened for registration this week.

Read more: Today at Apple, Apple newsroom

More [AR]T: Tate Museum and Instagram collaborate on augmented exhibition

Evidently AR has found a home in the art world: Apple [AR]T, Google's Pocket Galleries, exhibitions at the Art Institute of Chicago, and much more. This summer, Instagram and the Tate museum in London collaborated on another next-gen art project: “The Virtual Wing: Powered by Spark AR.” Enter the museum, open the Instagram camera, and scan the Tate’s Instagram Nametag. Visitors are then met with a 3D map of the museum that helps them find the eight AR-enabled paintings in the gallery. All you have to do is point the camera at a painting to see it animate and reveal accompanying information. Built on Spark AR (Facebook's creator studio for building Instagram camera effects), the Tate project is an early showcase for a platform that competes with Snapchat’s Lenses. Facebook is betting big on contextually relevant, socially oriented AR. And you should too.

Read more: Hypebeast, Facebook announcement (GIF)

59 percent of developers’ current or potential VR and AR projects fall in the gaming space, says WIRED survey

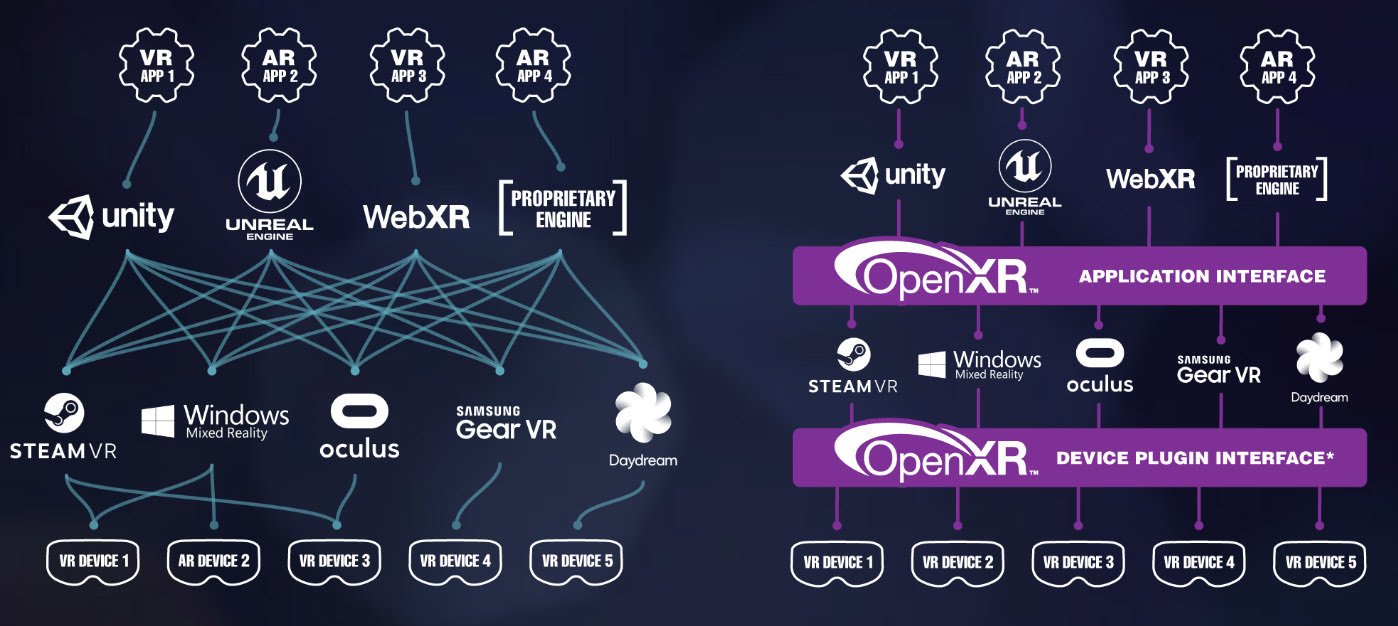

One step closer to cross-platform immersive experiences: Industry signs onto OpenXR 1.0

The Khronos Group has the super glue for the fragmented VR/AR industry. The newly released OpenXR 1.0 specification aims to standardize how immersive applications interface with VR and AR hardware and runtimes, so that a single app can be deployed across platforms such as SteamVR, Windows Mixed Reality, Oculus, Samsung Gear VR, and Daydream. Its adoption depends on each tool in the immersive workflow integrating the standard individually. Companies including Google, HP, Huawei, Intel, LG, Magic Leap, Samsung, Unity, and Valve, among others, have signed on to the new standard, promising a “truly vibrant, cross-platform standard for XR platforms and devices.” In practice, once the relevant runtimes and engines support it, an app developed for HTC Vive could run on Oculus Quest without being rebuilt, and a Google ARCore experience could be brought to Huawei devices with ease. Notably absent from the list of signed-on parties is Apple. This is a huge step for our nascent industry: content can flow more freely between form factors and reach the widest possible audience.

Read more: wccftech, Road to VR

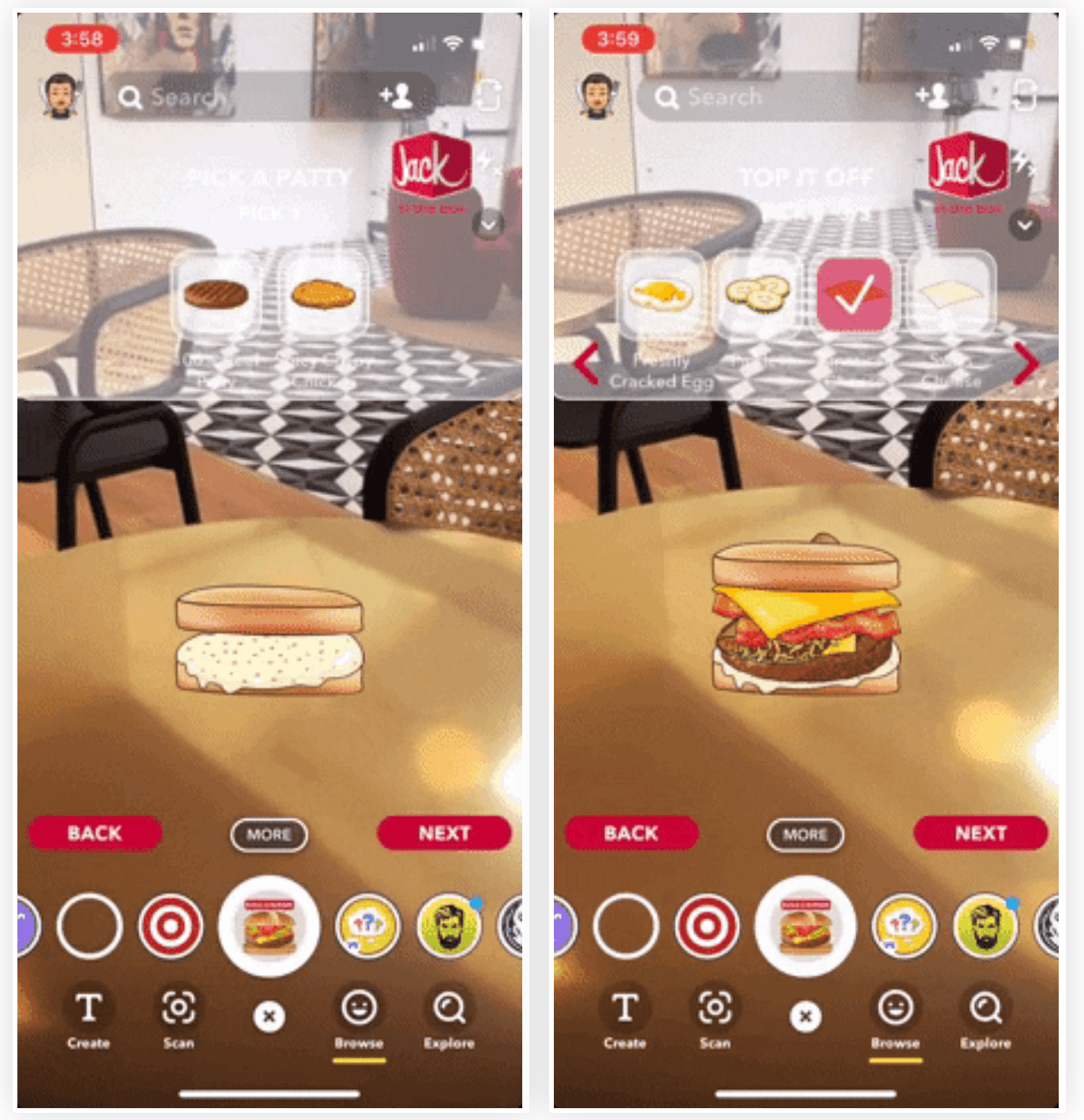

Snapchat AR lenses flex strength as marketing tools

Jack in the Box put an AR Lens twist on the traditional sweepstakes: play the BurgAR game on Snapchat for a chance to win prizes. Mix and match burger ingredients, record videos of floating burgers, and enter the sweepstakes, all within one Snapchat Lens. “With their unholy sandwich prepared, users are then asked to save a picture, return to the Lens, and click ‘More’ (thanks to Snap’s Shoppable AR e-commerce platform) to navigate to the contest website.”

Read more: Next Reality (GIF)

Adobe Project Glasswing imagines transparent MR computer screens

Adobe has dreamed up an entirely new interface for MR: a transparent, touch-interactive glass display. Creators build digital media assets in tools like After Effects or Photoshop and port them onto the display. “By controlling the opacity and emissive color of each pixel independently, the display acts like a conventional monitor or could have transparent windows to see physical objects.” Think of it like a low-opacity Photoshop layer: the project qualifies as mixed reality because it blends digital content with the physical objects behind the glass. Announced at SIGGRAPH, the technology is an alternative to high-end MR wearables, next-gen smart glasses, or even the smartphone camera. Adobe imagines MR delivered through this see-through interactive display, with no special lenses required. Imagine use cases for in-store advertising, museum exhibitions, and educational lessons. Watch the video to truly grasp this disruptive technology.

Read more: Adobe Blog

New Yorkers!

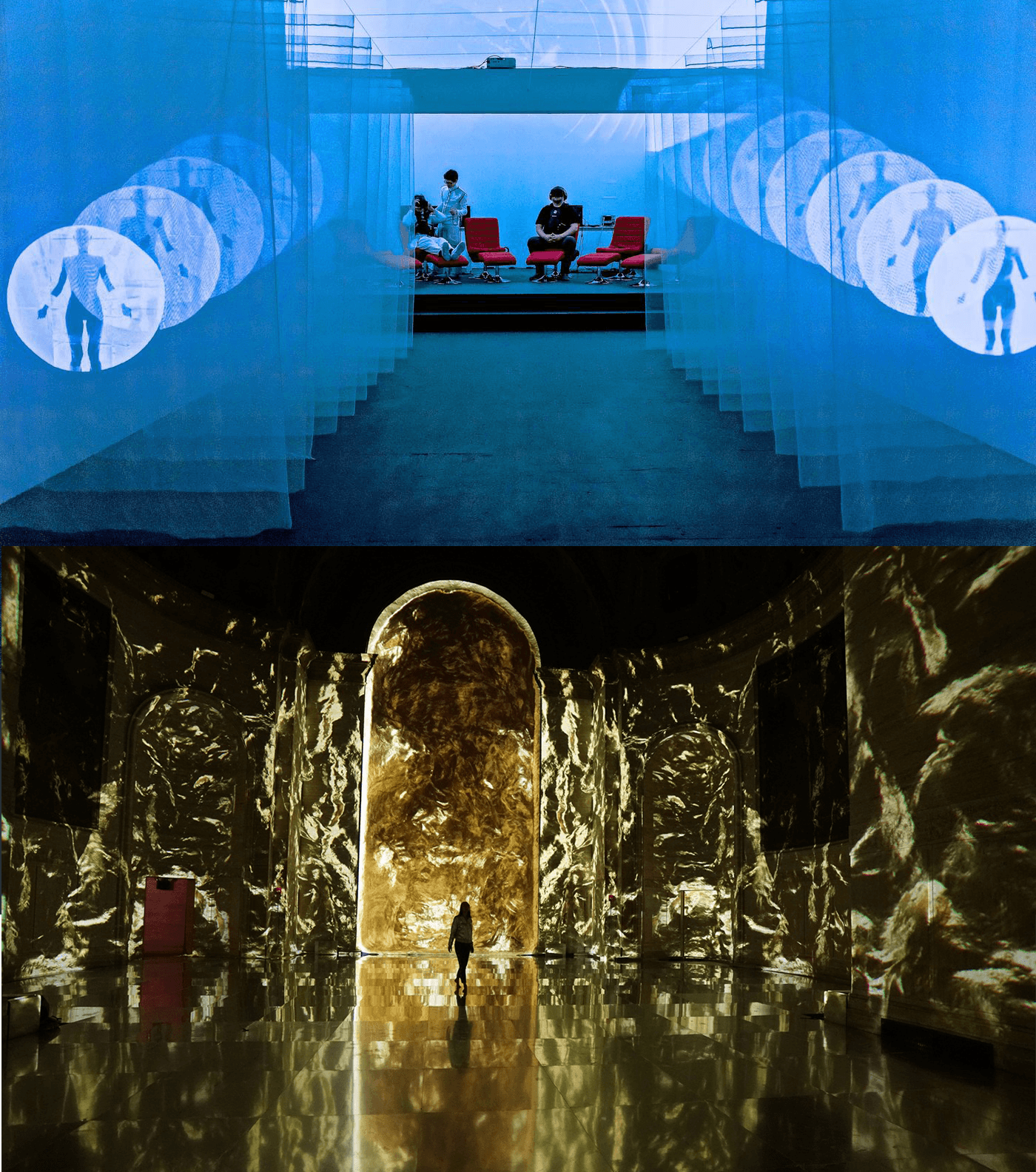

Go see new immersive exhibitions at the Museum of Future Experiences and Cipriani 25 Broadway.

The MoFE exhibition is “a curated cerebral experience blending immersive theater, psychology, and virtual reality for an intimate exploration of individual and collective consciousness.” It’s backed by the prestigious tech accelerator Y Combinator; tickets must be purchased in advance and cost $50 for an hour-long experience. Read more on Bloomberg.

In August, the downtown Cipriani 25 Broadway event space will host “SuperReal,” an immersive digital art exhibition created by multimedia production studio Moment Factory. Inspired by the architecture and history of the landmarked building, the Montreal-based studio has created an experiential installation exploring the intersection of new and old technologies. Read more at Galerie Magazine.

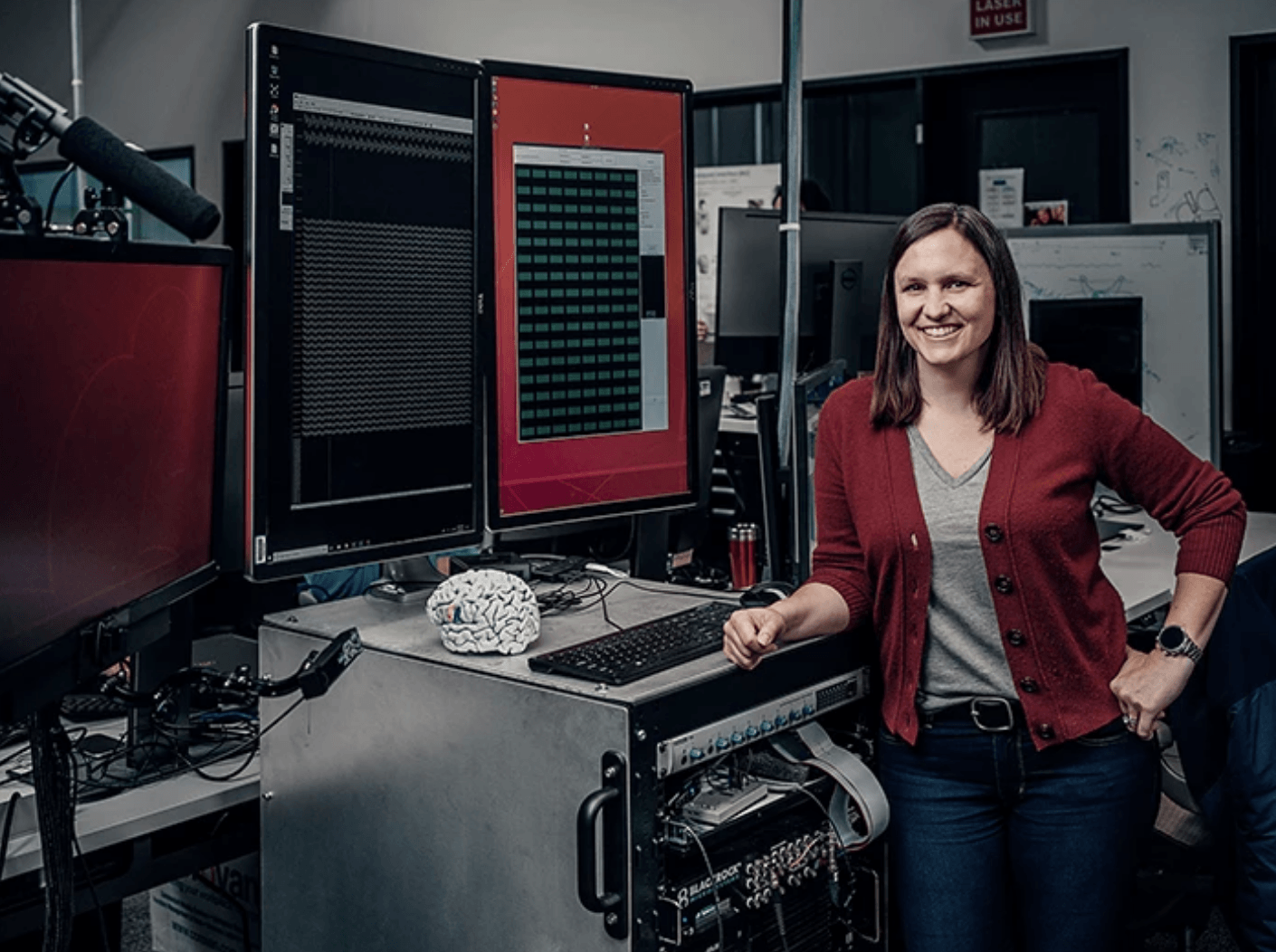

Soon you’ll be able to type with your brain. Thanks, Facebook?

Facebook Reality Labs has been refreshingly public about its disruptive research. The latest “leak” (by which we mean a polished, published blog post) details advanced development of “a non-invasive, wearable device that lets people type by simply imagining themselves talking.” The idea was first hatched at Facebook’s F8 conference in 2017, and since then the lab has made extensive strides toward building a brain-computer interface (BCI) into its planned pair of stylish augmented reality glasses. The research, conducted in tandem with the University of California, San Francisco, aims to help patients with neurological damage communicate by tracking brain activity and translating it into words in real time. In its current stage, the algorithm can only detect and recognize a small set of words and phrases. Ultimately, the researchers hope to decode 100 words per minute in real time, with a 1,000-word vocabulary and a word error rate below 17%. Facebook believes that “we’re standing on the edge of the next great wave in human-oriented computing, one in which the combined technologies of AR and VR converge and revolutionize how we interact with the world around us — as well as the ways in which we work, play, and connect.”

Read more: UploadVR, Facebook tech blog